Federal Register Corpus (1994–2025)

Tomé, D. (2025). Deterministic Federal Register Corpus (1994–2025). Zenodo. https://doi.org/10.5281/zenodo.18288508

This page is the public surface of a 30‑year proof‑run: (In)Canon applied end‑to‑end across the modern Federal Register. The output is not “documents”. It is a deterministic structural record that turns verbatim authority into computable commitments while preserving absence.

What it proves is simple and rare: across three decades, administrative authority is overwhelmingly responsibility‑addressable. Once that responsibility floor exists, you can measure complexity, drift, and non‑executable authority without importing interpretation.

The parsing phase for this corpus required 480 compute hours. The corpus is released free of charge as a proof-of-work artefact. The commercial value is the engine that produced it: (In)Canon, a deterministic admissibility system you can license to run the same analysis on your own corpora.

Spanning 7,873 dated snapshots from 1994 to 2025, this corpus maintains a 99.99% Actor Presence rate. That is not “data quality” in the casual sense. It is a structural guarantee: a stable responsibility floor that lets you quantify dependency accretion, outcome absence, time binding, and cross‑reference drift without guessing what the law “means”.

Most compliance and governance work quietly depends on reconstruction: humans fill gaps, infer actors, and retrofit outcomes. This artefact shows where you don’t have to. With a responsibility floor in place, structural signals become measurable inputs: where authority is executable, where it is hollow, and where complexity has accreted beyond local readability.

Federal Register (verbatim history)

│

▼

(In)Canon lens (deterministic admissibility)

│

├─ Baseline of responsibility (actor presence floor)

├─ Accretion diagnostics (dependency / entanglement)

├─ Absence reporting (not stated stays not stated)

└─ Audit artefacts (hashes, versions, timestamps)

This public release is intentionally trade-secret safe: it distributes deterministic artefacts and baselines, not internal rule logic.

Baseline of Responsibility

Most public corpora cannot reliably answer the simplest operational question: who is responsible? They are anonymous, fragmented, or structurally inconsistent. The Federal Register is different.

This corpus demonstrates that across the modern era of the Federal Register, a responsible actor is explicitly identified at an extraordinary rate (99.99% Actor Presence). That makes the administrative state a rare kind of corpus: it is responsibility-addressable at scale.

Once you have that deterministic floor, you can measure every other structural property without inventing meaning: outcomes, time binding, dependency accretion, cross-reference debt, and the density of non-executable authority.
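As a rough illustration, the Actor Presence rate can be read as a plain ratio: units with an explicitly stated actor divided by all units. The sketch below assumes a hypothetical record shape (`Unit`, `unit_id`, `actor`) and invented sample values. It is not the (In)Canon schema or rule logic, which this release does not disclose.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Unit:
    """One structural unit (hypothetical schema). `actor` is None when no actor is stated."""
    unit_id: str
    actor: Optional[str]


def actor_presence_rate(units: list[Unit]) -> float:
    """Share of units whose actor is explicitly stated. Absent actors are counted, never filled in."""
    if not units:
        raise ValueError("cannot compute a rate over zero units")
    stated = sum(1 for u in units if u.actor is not None)
    return stated / len(units)


# Invented sample data, for illustration only.
sample = [
    Unit("u1", "Agency A"),
    Unit("u2", "Agency B"),
    Unit("u3", None),  # actor not stated: stays not stated
    Unit("u4", "Agency C"),
]
rate = actor_presence_rate(sample)
print(f"{rate:.2%}")  # 75.00%
```

The design point is that a missing actor lowers the rate instead of being repaired, so the figure stays a measurement of what the text states.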

What this proves

- Responsibility is structurally present (not implied) at state scale.

- Large-corpus claims become computable without interpretation.

- The baseline becomes a reference surface for anomaly detection.

Why it matters

- Most “AI compliance” pipelines still rely on reconstruction.

- This corpus separates explicit responsibility from inferred responsibility.

- You can audit where authority becomes non-executable without guessing why.

Regulatory Thicket

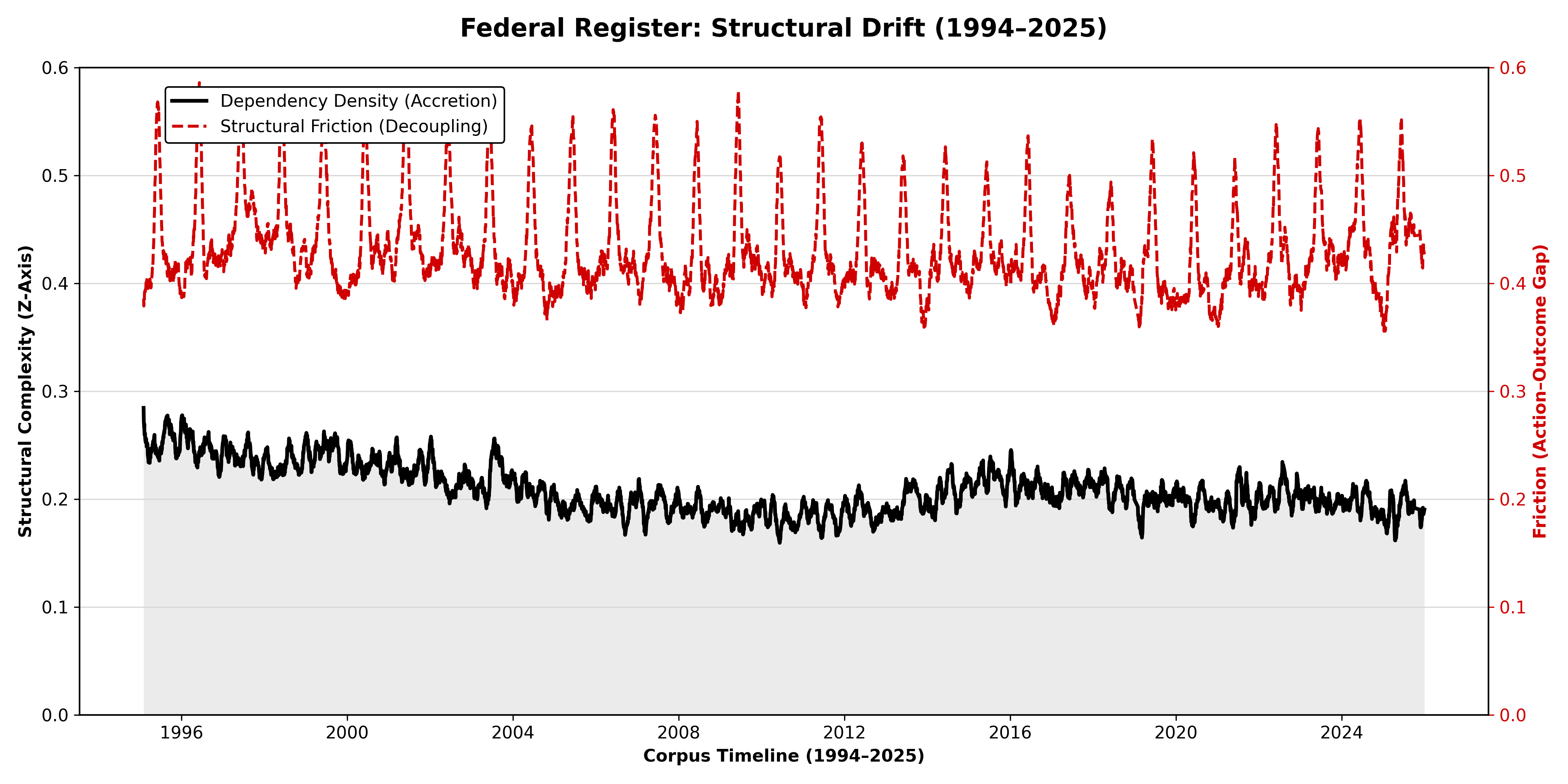

The dependency rate is the “interest factor.” It measures the degree to which present units rely on previously stated structure. At scale, this is not a metaphor: it is a quantifiable accretion of complexity.

Over time, regulatory text does not just grow—it entangles. Cross-reference dependencies become technical debt: the present becomes executable only by reconstructing the past. The dependency rate provides a numeric surface for that entanglement.
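The page does not publish the formula behind the dependency rate. One plausible reading, assumed here purely for illustration, is the share of units in a snapshot that carry at least one reference to previously stated structure. The function name, the input shape, and the placeholder reference strings below are all hypothetical.

```python
def dependency_rate(cross_refs_per_unit: dict[str, list[str]]) -> float:
    """Fraction of units with at least one reference to earlier structure (assumed definition)."""
    if not cross_refs_per_unit:
        raise ValueError("cannot compute a rate over zero units")
    dependent = sum(1 for refs in cross_refs_per_unit.values() if refs)
    return dependent / len(cross_refs_per_unit)


# Invented snapshot: unit id -> references found verbatim in that unit.
snapshot = {
    "u1": ["earlier-unit-17"],
    "u2": [],
    "u3": ["earlier-unit-03", "earlier-unit-17"],
    "u4": [],
}
print(dependency_rate(snapshot))  # 0.5
```

Computed per dated snapshot, a ratio like this yields one value per point in time, which is the kind of series a time-versus-entanglement plot needs.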

This corpus turns the Federal Register into a 3D map: time (X), volume (Y), dependency entanglement (Z).

The point is not “a chart.” The point is that the corpus makes drift observable at national scale: you can see when cross‑reference debt rises and when mandates decouple from defined outcomes — without importing anyone’s theory of why.

The Red Bridge

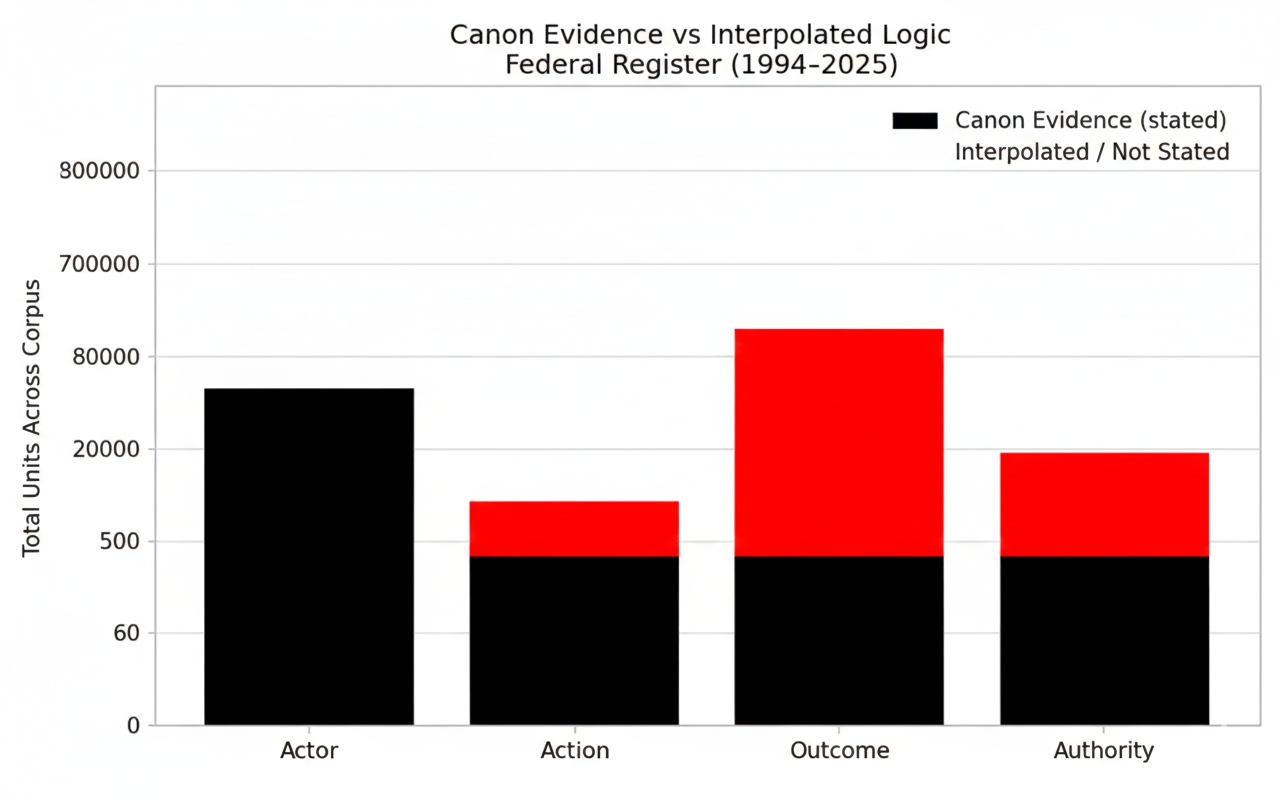

Readers reconstruct. Models reconstruct. Most pipelines quietly conflate reconstruction with evidence. (In)Canon does the opposite: it makes reconstruction visible by refusing to fill gaps.

Verbatim commitments latched from the source. Offsets preserved. Hashes recorded. Deterministic artefacts. This is the ground truth layer.
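A minimal sketch of what a verbatim anchor with preserved offsets and a recorded hash could look like. The `Anchor` fields, the helper names, and the sample sentence are assumptions for illustration, not the format of the released artefacts.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    start: int    # character offset into the source text
    end: int      # exclusive end offset
    sha256: str   # hash of the verbatim span, UTF-8 encoded


def _digest(span: str) -> str:
    return hashlib.sha256(span.encode("utf-8")).hexdigest()


def make_anchor(source: str, start: int, end: int) -> Anchor:
    """Record where a span sits and what it hashed to. The text itself is not altered."""
    return Anchor(start, end, _digest(source[start:end]))


def verify_anchor(source: str, anchor: Anchor) -> bool:
    """True only if the span at the recorded offsets still hashes to the recorded value."""
    return _digest(source[anchor.start:anchor.end]) == anchor.sha256


# Invented sentence, not a quotation from the Federal Register.
source = "The Administrator shall publish the notice within 30 days."
start = source.index("shall publish")
anchor = make_anchor(source, start, start + len("shall publish"))

assert verify_anchor(source, anchor)
assert not verify_anchor(source.replace("shall", "may"), anchor)
```

Because verification needs only the source text and the anchor, anyone holding the published text can check an anchor without access to the engine that produced it.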

Any attempt to “complete” missing structure is an interpolation. We can build bridges for downstream use, but we never confuse bridges with ground truth.

We provide a sequence of 7,873 dated snapshots that explicitly distinguishes “what is stated” from “what would have to be inferred.” This is how you prevent structural hallucination from becoming institutional fact.

We don’t generate completeness — we report absence and preserve it.

Structural Rosetta Stone

The corpus is not a rewrite. It is a translation layer that turns “verbatim authority” into “deterministic commitments” without interpretation. That’s why it’s trade-secret safe: you can publish the artefacts and still protect the engine.

This release shows that a single deterministic admissibility system can ingest an authoritative state corpus and produce: (a) reproducible commitments with verbatim anchors, and (b) explicit absence reporting that never fills gaps.

The scope is frozen (1994–2025). The lens is consistent. The result is a proof-of-work artefact: one method applied end-to-end without interpretive drift.

Verbatim text (as published)

│

▼

Deterministic commitments (verbatim anchors + offsets)

│

├─ presence / absence (stated vs not stated)

├─ dependency linkage signals (cross-reference entanglement)

└─ audit bundle (hashes, versions, timestamps)

Downloads


Not “documents.” A deterministic baseline that proves responsibility is explicitly named at scale, plus artefacts that preserve absence instead of filling it. This is the public demonstration surface for licensing the engine.

Integrity guarantees

Deterministic outputs

- Same input → same output (no stochastic behaviour)

- Stable artefacts support regression tests

- Audit bundles: hashes, versions, timestamps
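The "same input, same output" property lends itself to a hash-based regression check. The sketch below is one way to do that under stated assumptions: `toy_parse` is a stand-in for any deterministic parser (it is not the (In)Canon engine), and `artefact_digest` is a hypothetical helper that hashes a canonical JSON serialisation.

```python
import hashlib
import json


def artefact_digest(artefact: dict) -> str:
    """Hash a canonical JSON form so key order and whitespace cannot change the digest."""
    canonical = json.dumps(
        artefact, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def toy_parse(text: str) -> dict:
    """Stand-in deterministic parser, for illustration only."""
    return {"length": len(text), "tokens": text.split()}


text = "Notice is hereby given."
first = artefact_digest(toy_parse(text))
second = artefact_digest(toy_parse(text))

# Same input, same digest: a regression test can pin this value.
assert first == second
# Key order does not affect the digest, because serialisation is canonical.
assert artefact_digest({"a": 1, "b": 2}) == artefact_digest({"b": 2, "a": 1})
```

Storing the digest alongside a version and timestamp gives a minimal audit record: a later run that produces a different digest for the same input and version is a regression by definition.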

Non-inferential handling

- No gap-filling (absence is preserved)

- Binary reporting: stated vs not stated

- No scoring, weighting, or normative judgements in the corpus artefacts

Trade-secret safe by design

Public artefacts show behaviour and outputs (what the system returns), but do not disclose internal rule logic, lexicons, or constraint sets that constitute the licensable engine.

The selling point is the boundary: Canon Evidence stays evidence. Everything else is explicitly marked as bridgework, not ground truth.

Licensing (In)Canon

This corpus exists to prove that (In)Canon can deterministically establish a responsibility baseline and structural diagnostics on an authoritative corpus at national scale. It is the proof surface, not the product.

(In)Canon can be licensed as an internal engine for:

- LLM pipeline gating (prevent schema-shaped hallucination)

- Regulatory / governance corpus structural baselining

- Dependency accretion diagnostics (“regulatory thicket” mapping)

- Evidence tooling (verbatim anchors + explicit absence reporting)

Use Contact to discuss licensing.

Boundary

- No legal interpretation

- No compliance conclusion

- No statement of correctness or adequacy

- No scoring or ranking presented here as “truth”

- No moral, political, or normative judgement

- No disclosure of internal rule logic

(In)Canon identifies structure and reports stated vs not stated. It does not assess meaning, correctness, quality, compliance, or adequacy.
